Don’t fight with the Dude. (Round 1)

Prelude

I’m a big fan of tools that help to make my life easier. But, tools that give you almost everything you need but fall short in a critical area are a big source of frustration. Visual Studio for Database Developers aka DataDude is one such tool. DataDude is great for allowing a user to get the database schema into files so that they can be source controlled. If you’re not using source control, of some kind, on your database you’re doing your employer a disservice. I like having each database object in a file so that I can see how it has evolved over time using a combination of SubVersion and WinMerge. DataDude also provides the capability to generate data for a database using a variety of data generators, from string generation to regular expressions. This is extremely useful if you want to do unit testing or load testing against your database to see how an application is going to perform when data volumes increase.

However DataDude data generation falls short in one key area, static data or lookup tables. This is data which is constant and the shape and type of data is critical to application execution, possibly represented in code as enumerations. If you want to generate data for a table which has a foreign key to a lookup table DataDude wants to generate the data for the lookup table also.

There are several threads on the dbpro forums which discuss options to work around this problem. I’ve found that the best option is to not fight the Dude. My solution is to let the Dude generate the data, then remove all the foreign key constraints and replace the generated data with my own data and then re-enable the constraints. Let’s take a look at the process.

The Setup

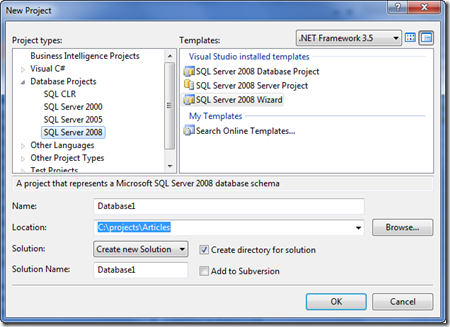

First, you’ll need a DbPro project. There’s a wizard to guide you through the process just choose the version appropriate for your project.

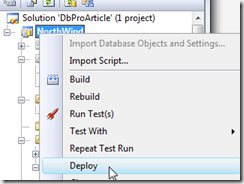

Once you’re through the process you’ll end up with a somewhat familiar view which should contain all the objects in your database. This sample reverse engineered the NorthWind database.

In this example I will use the Northwind Categories and Region tables to represent the static data tables. Don’t put the insert statements in the Script.PostDeployment.sql, there seems to be an issue with how Identity fields get assigned from the generated data when data already exists in the tables; so you want to start with clean tables before running the data generation process.

Create a file(s) which will contain your scripted data I just use one file but you can use multiple files and I also add a delete from <table> before each set of inserts. (I’ll explain why you can’t use truncate a bit later) You won’t need to worry about the order of insertion because the constraints will be disabled when the inserts are run and then enabled after the inserts. I use SetCheckConstraints (which is a slightly modified version of disable_fk_constraints.txt ) script to handle the enabling and disabling of constraints in the database.

The next step is to create the data generation plan. If you right click on the project and select the Add menu then select Data Generation Plan this will add a new file type to the project and open up a window listing out the tables in the project, columns and a preview of the data which will be generated for the currently selected table.

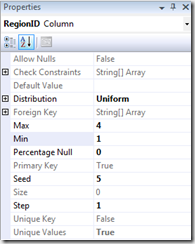

There are several options for generating data and depending on the SQL type you will have options from generating random data to data generated according to a regular expression. For the purpose of this article we’ll just be working with the Integer generator, if you have other fields in your table, as in the example above there is a RegionDescription field, just use the defaults remember we’ll be removing all of the rows after the generation process. The data generation process will only be used to set up the foreign key associations, the actual content of these rows does not matter.

For each of the lookup tables set the number of Rows to Insert equal to the number of rows you have in your scripted data, the above example has four (4) rows in the Region table and eight (8) rows in the Categories table (not shown). Set the rest of the Data Generation Plan to meet your specific needs. I would suggest starting with a smaller number of rows initially until you are familiar with the generation process, creating millions of rows can be done but it does take some time.

If your table uses an Identity(1,1) you won’t be able to set any properties on the primary key column as in the case of the Categories table, the primary key column will be set to SQL Computed Value and as long as all of the values to be inserted are sequential and begin with one (1) this should be just fine.

This creates a SQL script file in the sql\{Configuration} folder which you can run to create a new database, viewing the Output Window will show the exact location of the file, see the highlighted area below.

Open this file in SQL Server Management Studio (SSMS). The first thing you should notice is that it is not a normal SQL script file. It is a SQLCMD file and you’ll need to switch the SQL Query mode in order for SSMS to parse the file correctly.

At the top of the file there will be two setvar commands the first will be the name of the database which will be created, the other will be the path for the database files, set these variables appropriately then hit F5 to generate the database.

GO

:setvar DatabaseName "Test_NorthWind"

:setvar DefaultDataPath "c:\MSSQL\"

You may have issues with the database creation if the file names already exist. I usually change the create database lines to use the $(DatabaseName) variable for the names of the files see line numbers 6 and 7 below.

1: GO

2: PRINT N'Creating $(DatabaseName)...'

3: GO

4: CREATE DATABASE [$(DatabaseName)]

5: ON

6: PRIMARY(NAME = [Northwind], FILENAME = '$(DefaultDataPath)$(DatabaseName).MDF', MAXSIZE = UNLIMITED, FILEGROWTH = 10 %)

7: LOG ON (NAME = [Northwind_log], FILENAME = '$(DefaultDataPath)$(DatabaseName)_log.ldf', MAXSIZE = UNLIMITED, FILEGROWTH = 10 %)

8: GO

Review the output for any errors and correct them as necessary. Once the database has been successfully created run the data generation process. Return to Visual Studio and open the Data Generation Plan if it is not open and from the Visual Studio menu select Data/Data Generator/Generate Data to begin the process this will prompt for a database connection, select a new connection and connect to the database created above. The next prompt asks about deleting existing data, select Yes since we just created the database. You should receive a Data generation completed window with all green status indicators, if not review the Output window for issues and re-run the process.

Take look at what has been created.

Four rows have been inserted into Region and eight rows into the Categories table with a bunch of random strings and additional data. The thing to note here is that the ID columns are sequential values matching the values we have in our scripted data.

The Knockout

Here’s where instead of fighting with the Dude, we’ll embrace the foreign key constraints put in place and just replace the data in the lookup tables with the data in the data scripts. The first step is to remove the constraints in the database using the SetCheckConstraints procedure. If you included this procedure in your project it will already be available, otherwise download the code and execute it to create the procedure. SetCheckConstraints needs to be run before and after the insertion of our data. The first time we need to disable the constraints so run with an argument of @Enabled set to 0 and after the data is inserted run with an argument of @Enabled set to 1.

exec SetCheckConstraints @Enabled=0This will disable the foreign key constraints, this does not remove the constraints it just prevents SQL from checking them allowing us to remove all the data and replace it with our own. Above I said to use delete from table rather than truncate table this is why, since the constraints are not actually removed from the database SQL will not allow you to truncate the table, but you can still delete all the rows. Running with a @Enabled=1 will re enable the constraints.

Open the scripted data file in SSMS (in this data file I have included the exec SetCheckConstraints command before and after the insertion of data) and execute the file, then review the tables again.

There with the same ID’s generated by the Data Generation Plan is the data the application needs to function correctly.

Summary

- Put all of the database objects into a DbPro project.

- Create a Data Generation Plan and modify the lookup table generation plan to conform to the application data constraints.

- Let the Dude generate the data.

- Drop the foreign key constraints

- Replace the Dude’s data with your own.

- Enable the foreign key constraints.

If you don’t fight with the Dude, “the dude abides.”

In a follow-up post I’ll discuss how to work around various business rules your application is likely to enforce but automatic data generation will not set correctly.

I hope you have enjoyed my first blog post please feel free to comment below and I will attempt to address any issues raised.